Monitoring Social Media activity during elections meets a right to research

Vukosi Marivate and Seani Rananga touch on the challenges of monitoring social media activity for election misinformation in 2024

The year 2024 is significant for democracies worldwide, with numerous important elections happening across South Africa, the African continent and globally. Alongside this, there’s a growing concern about how Artificial Intelligence (AI) might affect the integrity of these elections. At the Data Science for Social Impact (DSFSI) research group, our work mainly involves creating AI systems. However, we’re increasingly worried not just about AI’s role in spreading false information but about the broader challenge of monitoring social media to spot misinformation.

This challenge has grown due to major changes in how we can access data from social media platforms. These changes have been driven by new business models, including the monetization of Large Language Models, which have led platforms to start charging for data access, a change that affects even researchers. Additionally, the tools available for analysing social media data have become less functional, and there’s been a shift towards using marketing tools for such analyses.

These developments make it hard for researchers to keep track of misinformation on social media, a crucial task for maintaining election integrity. It’s vital for the public to be aware of these issues. There’s a need for better policies in Africa and elsewhere to ensure that researchers can access the data they need to help protect democracies in the digital age.

Living in the post-Cambridge Analytica world

In 2018, it was revealed that Cambridge Analytica, a political consulting firm, had accessed the personal data of millions of Facebook users without their consent. This data was collected via a third-party application, which also gathered information from the users’ network of friends on Facebook. The firm used this data to develop targeted political advertising campaigns during significant political events such as the 2016 U.S. presidential election and the Brexit referendum. The incident highlighted critical issues regarding user privacy, data ethics, and the potential impact of data misuse on democracy.

In response to the scandal, Facebook/Meta significantly tightened its data access policies via its APIs, affecting not only commercial users but also the academic research community. This move has been widely criticised and identified as a considerable challenge for scholars and researchers who depend on social media data for their work. The extent of the issue and its implications for academic research are thoroughly examined in this article, which provides an insightful perspective. The ultimate concern is that, with more restrictions, we collectively as a society have even fewer tools to monitor whether bad actors are operating on such platforms. This is something that cannot be left to platforms to do on their own. For example, platforms have been shown to fall short in moderating content in indigenous languages.

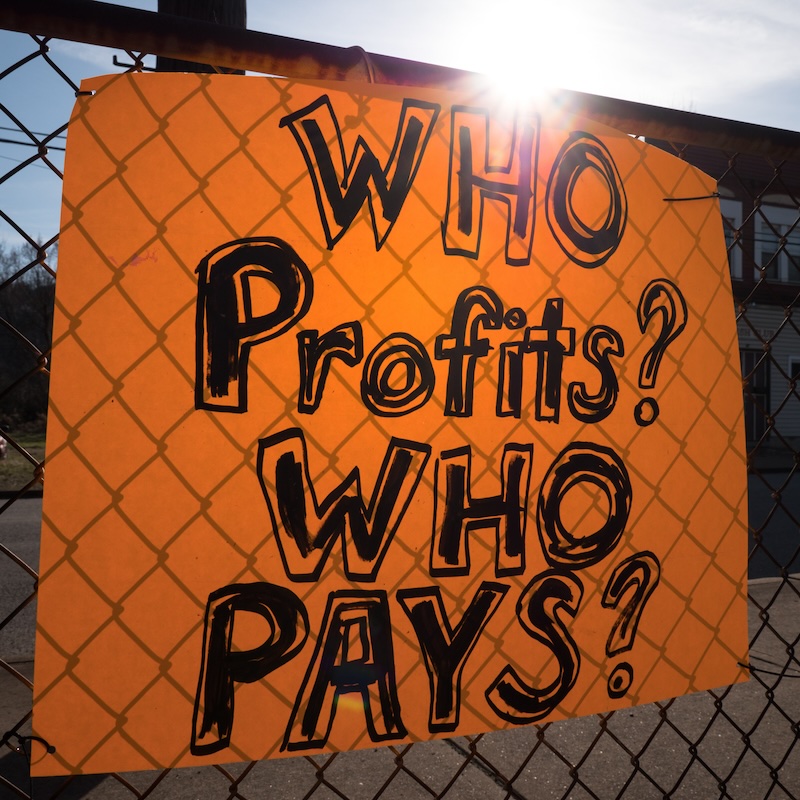

30 November 2022: an LLM Revolution and a question of who pays for the internet

On 30 November 2022, the release of the large language model ChatGPT marked a pivotal moment in the digital economy, raising critical questions about the funding model of the internet. This development, alongside the emergence of other commercial large language models (LLMs), prompted social media platforms to reassess their monetization strategies. Historically, these platforms provided relatively open access to their APIs, enabling the creation of third-party enhancements and data processing, underpinned by the platforms’ reliance on advertising for revenue. However, academic researchers, who previously had access to research APIs like Twitter’s (concerns about restrictions were already raised during its 2022 acquisition), faced challenges. The advent of LLMs, which often utilise data scraped from these platforms, led to a significant shift. In response, platforms began to limit access and introduce charges for data usage, especially for entities developing LLMs, reflecting a transformation in the economic models of social media, from solely ad-based revenue to direct data monetization. This shift has significantly impacted academic research, as the financial barriers to accessing necessary data have increased dramatically.

Using Marketing Tools for research?

Prior to mid-2023, researchers engaged in approved projects could access up to 10 million Twitter posts per month at no cost through the platform’s research program. However, this program has been discontinued, shifting to a model where access to Twitter’s data is only available through a paid API, with costs amounting to 100 USD for 10,000 posts per month. For instance, our election monitoring project in 2021 (https://dsfsi.github.io/zaelection2021/), which gathered 700,000 Twitter posts, would now incur expenses of about 7,000 USD (over 130,000 ZAR) solely for data collection. This shift towards paid access has significantly hampered the efforts of both academic researchers and journalists, as highlighted by a discussion on CJR. In the face of these financial barriers, researchers have begun to rely on marketing tools like Brandwatch and Meltwater to conduct research, even though these are also paid services and were built for marketing analysis of social media content rather than for research.
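
The arithmetic behind that estimate can be sketched in a few lines. This is only an illustration: it assumes the quoted price of 100 USD per 10,000 posts scales linearly and that a partly used block of posts is billed in full. The function name and the rounding rule are our own assumptions, not the platform's actual billing logic.

```python
import math


def estimate_collection_cost_usd(num_posts, posts_per_block=10_000, usd_per_block=100):
    """Rough cost of collecting num_posts at a flat price per block of posts.

    Assumption: a partly used block is billed as a whole block.
    """
    blocks_needed = math.ceil(num_posts / posts_per_block)
    return blocks_needed * usd_per_block


# The 2021 election monitoring project gathered 700,000 posts.
print(estimate_collection_cost_usd(700_000))
```

For 700,000 posts this works out to 70 blocks of 10,000 posts, which is where the figure of roughly 7,000 USD comes from.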

Wider Implications

The consequences of restricted data access extend well beyond misinformation, significantly impacting research in areas like linguistics and low-resource natural language processing. Social media platforms, once fertile ground for linguistic studies, particularly concerning local and indigenous languages, now present challenges for researchers due to limited access. This limitation jeopardises the study and preservation of many local languages. For instance, projects such as AfriHate, which focuses on identifying hate speech in local languages and developing automated detection models, and AfriSenti, aimed at understanding sentiments expressed in African languages, face difficulties in expanding their research to cover more languages. Moreover, the restricted access hinders the ability to collect valuable linguistic data from public broadcasters like the SABC, which often use local languages on their social media platforms even though their primary website content is predominantly in English.

Read more about our work on battling misinformation

- Combating Online Misinformation @ Data Science for Social Impact https://dsfsi.github.io/blog/combating-misinformation/

- Insights from the Multi-Sectoral Workshop on Battling Misinformation Ahead of the 2024 South African Elections https://dsfsi.github.io/blog/battling-misinformation-workshop-2024/

Image modified from https://www.flickr.com/photos/9602574@N02/32547971877/